Artificial Intelligence seems destined to change the world. But it needs to get its act together first or there may be hell to pay, writes one of two Joe Laurias.

By Joe Lauria (Not the Weatherman)

Special to Consortium News

There is no way to reverse the emergence of Artificial Intelligence on its way to dominating our lives. From customer service to censorship and war, AI is making its mark, and the potential for disaster is real.

The amount of face-to-face contact, or even human voice-to-voice interaction on the phone, when doing business has been declining for years and is getting worse with AI. But AI is doing far more than just eroding community, destroying jobs and enervating people waiting on hold.

AI is increasingly behind decisions social media companies make on which posts to remove because they violate some vague “community” standards (as it destroys community), when it’s obvious that AI is programmed to weed out dissident political messages.

AI also makes decisions on who to suspend or ban from a social media site, and seems to also evaluate “appeals” to suspensions, which in many cases would be overturned if only a pair of human eyes were applied.

Facebook founder Mark Zuckerberg admitted this week that, “We built a lot of complex systems to moderate content, but the problem with complex systems is they make mistakes.” Facebook admits it used AI systems to remove users’ posts.

“Even if they accidentally censor just 1% of posts, that’s millions of people, and we’ve reached a point where it’s just too many mistakes and too much censorship,” Zuckerberg said.

The absurd lengths to which AI is being applied include a “think tank” in Washington that is run entirely by Artificial Intelligence. Drop Site News reports on the Beltway Grid Policy Centre, which has no office address.

“Beltway Grid’s lack of a physical footprint in Washington — or anywhere else on the earthly plane of existence — stems from more than just a generous work-from-home policy. The organization does not appear to require its employees to exist at all,” the site writes. Yet it churns out copious amounts of reports, press releases, and pitches to reporters.

While this may be an extreme use of AI, the more usual interaction with the public is plagued by another problem: how wrong AI can be.

Still Quite Experimental

AI is clearly still in an experimental stage and even though significant mistakes are inevitable, they have not slowed its application. The errors range from the mundane to the ridiculous to the dangerous.

I recently discovered an example of the ridiculous.

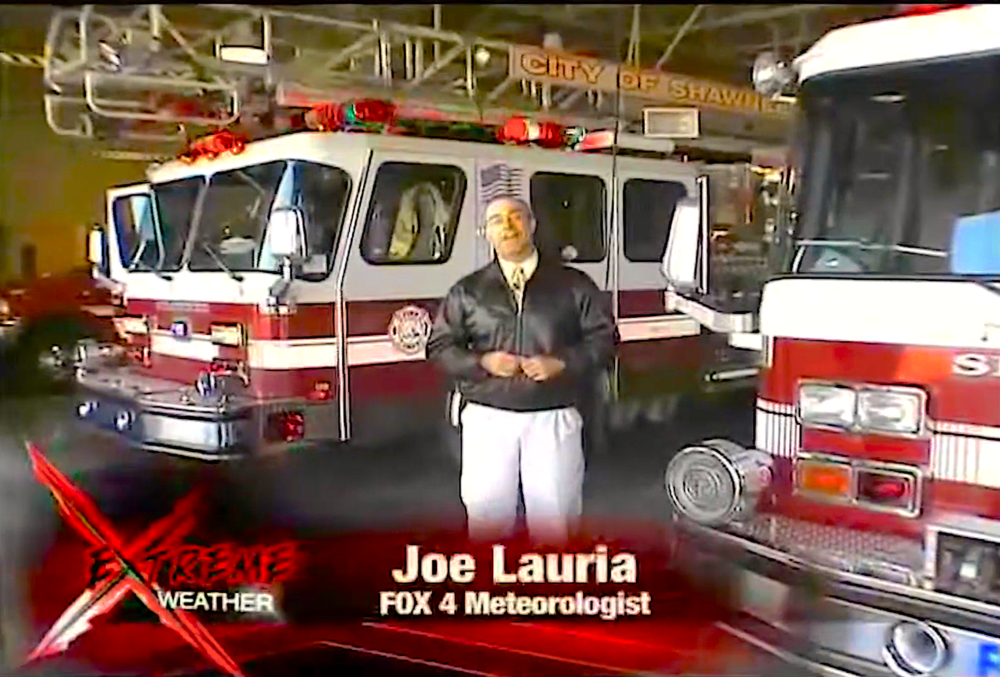

Anyone who searches Google for their online profile is bound to find other people with their exact name. I’ve long been aware of Joe Lauria, a TV weatherman for the Fox affiliate in Kansas City, Missouri.

We have been confused before. Just two weeks ago, I received an email from a Fox viewer in the Kansas City area praising Lauria for being so well dressed and asking me for advice on where to buy a suit. I was curious who else might be confused, so I asked Grok, Elon Musk’s much-touted AI system, who Joe Lauria is.

This is the response I got:

“Joe Lauria is a multifaceted professional known primarily for his work in journalism and meteorology. Here’s an overview of his career based on available information:

Journalism: Joe Lauria has been a foreign affairs journalist based at the United Nations since 1990. He has contributed to numerous publications, including the Boston Globe, the London Daily Telegraph, the Johannesburg Star, the Montreal Gazette, the Wall Street Journal, the New York Times, the Washington Post, Salon.com, and The Guardian.

He has also been an investigative reporter for The Sunday Times of London and Bloomberg News. Lauria is currently the editor-in-chief of Consortium News, where he writes on international affairs with a focus on the Middle East. He has co-authored books with notable figures like former U.S. Senator Mike Gravel, focusing on U.S. foreign policy and militarism.

Meteorology: Joe Lauria is an award-winning meteorologist. He has been working as the evening news meteorologist for FOX4 in Kansas City since January 1995, broadcasting weather forecasts every weeknight at 5, 6, 9, and 10 p.m. His approach to meteorology includes educating viewers on aspects of weather not commonly found on standard weather apps.

Prior to his role at FOX4, he served as Chief Meteorologist at KOSA-TV in Midland, Texas, and has worked in various other locations like Orlando, Florida, and Kansas City, Missouri. He has been recognized for his work by receiving the Seal of Approval from both the American Meteorological Society (AMS) and the National Weather Association (NWA). Lauria also holds the Certified Broadcast Meteorologist (CBM) designation from the AMS.

Personal Life: Joe Lauria was born in New Rochelle, New York, and currently resides in Olathe, Kansas with his wife, Jaye Lynn, and their two cats. He enjoys Italian restaurants, golf, and has been involved in community service through weather seminars and speeches.

Joe Lauria’s dual career in journalism and meteorology highlights his diverse interests and contributions to public understanding and safety through weather reporting and insightful reporting on international affairs.”

Just for the record, I don’t have any cats, I don’t play golf and I wasn’t born in New Rochelle (but not that far away in the Bronx). Of course there is a lot of humor in this. (It’s also funny that I entered university undecided whether to become a journalist or a meteorologist. Then I saw how much math is involved in meteorology).

The potential for such nonsense in a system that seems to be gradually taking over the world is not always a laughing matter, however. It’s troubling that Grok’s man-made intelligence assumed two people are one, rather than just admitting there are several people with the same name.

On the other hand, ChatGPT gave an impressive and incisive, politically neutral dissertation on my work in answer to the question “Who is Joe Lauria?” It was almost as if I’d hired it to be my PR agent. The essay sounds like ChatGPT spent months reading everything I’d written, when it was generated in seconds. There was no sign of the Kansas City weatherman, either.

However, when I delved a bit deeper into its “knowledge” of me, it made things up out of thin air. When I asked it what books I’d written, instead of naming the books I actually wrote, it came up with a completely fictional title of a supposed non-fiction book: The Assange Agenda: The Legacy of the Wikileaks Founder. It even assumed a publisher: Clarity Press.

No such book exists. Based on its knowledge of my reporting on Julian Assange, it made a ridiculous guess about an imaginary book it thought I must have written. In short, a lot of AI is BS.

AI & War

The damage in Gaza. (Naaman Omar\Palestinian News & Information Agency, Wafa, for APAimages, Wikimedia Commons, CC BY-SA 3.0)

As ridiculous as these results are, as frustrating as humanless interactions are becoming in customer service, such as appealing social media posts removed by AI, and as many jobs as are being lost to AI, the more harrowing concern about Artificial Intelligence is its use in the conduct of war.

In other words, what happens when AI errors move from the innocuous and comical to matters of life and death?

Time magazine reported in December on Israel’s use of AI to kill civilians in its genocide in Gaza:

“A program known as ‘The Gospel’ generates suggestions for buildings and structures militants may be operating in. ‘Lavender’ is programmed to identify suspected members of Hamas and other armed groups for assassination, from commanders all the way down to foot soldiers. ‘Where’s Daddy?’ reportedly follows their movements by tracking their phones in order to target them—often to their homes, where their presence is regarded as confirmation of their identity. The air strike that follows might kill everyone in the target’s family, if not everyone in the apartment building.

These programs, which the Israel Defense Force (IDF) has acknowledged developing, may help explain the pace of the most devastating bombardment campaign of the 21st century …”

The Israeli magazine +972 and The Guardian broke the story in April, reporting that up to 37,000 targets had been selected by AI (it should be much higher since April), streamlining a process that before would involve human analysis and a legal authorization before a bomb could be dropped.

Under intense pressure to drop more and more bombs on Gaza, the IDF came to rely on Lavender. The Guardian reported:

“‘We were constantly being pressured: ‘Bring us more targets.’ They really shouted at us,’ said one [Israeli] intelligence officer. ‘We were told: now we have to fuck up Hamas, no matter what the cost. Whatever you can, you bomb.’

To meet this demand, the IDF came to rely heavily on Lavender to generate a database of individuals judged to have the characteristics of a PIJ [Palestinian Islamic Jihad] or Hamas militant. […]

After randomly sampling and cross-checking its predictions, the unit concluded Lavender had achieved a 90% accuracy rate, the sources said, leading the IDF to approve its sweeping use as a target recommendation tool.

Lavender created a database of tens of thousands of individuals who were marked as predominantly low-ranking members of Hamas’s military wing,”

Ninety percent is not an independent evaluation of its accuracy, but the IDF’s own assessment. Even if we use that, it means that out of every 100 people Israel targets using this system, at least 10 are completely innocent by its own admission.

But we’re not talking about 100 individuals targeted, but “tens of thousands.” Do the math. Out of every 10,000 people targeted, 1,000 are innocent victims whose killing the IDF deems acceptable.

In April last year, Israel essentially admitted that 3,700 innocent Gazans had been killed because of its AI. That was eight months ago. How many more have been slaughtered?

The National newspaper out of Abu Dhabi reported:

“Technology experts have warned Israel’s military of potential ‘extreme bias error’ in relying on Big Data for targeting people in Gaza while using artificial intelligence programmes. […]

Israel’s use of powerful AI systems has led its military to enter territory for advanced warfare not previously witnessed at such a scale between soldiers and machines.

Unverified reports say the AI systems had ‘extreme bias error, both in the targeting data that’s being used, but then also in the kinetic action’, Ms Hammond-Errey said in response to a question from The National. Extreme bias error can occur when a device is calibrated incorrectly, so it miscalculates measurements.

The AI expert and director of emerging technology at the University of Sydney suggested broad data sets ‘that are highly personal and commercial’ mean that armed forces ‘don’t actually have the capacity to verify’ targets and that was potentially ‘one contributing factor to such large errors’.

She said it would take ‘a long time for us to really get access to this information’, if ever, ‘to assess some of the technical realities of the situation’, as the fighting in Gaza continues.”

Surely the IDF’s AI must be more sophisticated than commercially available versions of AI for the general public, such as Grok or ChatGPT. And yet the IDF admits there’s at least a 10 percent error rate when it comes to deciding who should live and who should die.

For what it’s worth, ChatGPT, one of the most popular AI systems, says the dangers of errors in the Lavender system are:

- “Bias in data: If Lavender is trained on data that disproportionately comes from certain sources, it could lead to biased outcomes, such as misidentifying the behavior of specific groups or misjudging certain types of signals.

- Incomplete or skewed datasets: If the data used for training is incomplete or does not cover a wide range of potential threats, the AI may miss critical signals or misinterpret innocuous activities as threats.” (Emphasis added.)

AI & Nuclear Weapons

A U.S. Senate bill would ban AI from involvement in nuclear weapons. (U.S. Army National Guard, Ashley Goodwin, Public domain)

The concern that mistakes by Artificial Intelligence could lead to a nuclear disaster is reflected in U.S. Senate bill S. 1394, entitled the Block Nuclear Launch by Autonomous Artificial Intelligence Act of 2023. The bill

“prohibits the use of federal funds for an autonomous weapons system that is not subject to meaningful human control to launch a nuclear weapon or to select or engage targets for the purposes of launching a nuclear weapon.

With respect to an autonomous weapons system, meaningful human control means human control of the (1) selection and engagement of targets; and (2) time, location, and manner of use.”

The bill did not get out of the Senate Armed Services Committee. But leave it to NATO to ridicule it. A paper published in NATO Review last April complained that:

“We seem to be on a fast track to developing a diplomatic and regulatory framework that restrains AI in nuclear weapons systems. This is concerning for at least two reasons:

- There is a utility in AI that will strengthen nuclear deterrence without necessarily expanding the nuclear arsenal.

- The rush to ban AI from nuclear defenses seems to be rooted in a misunderstanding of the current state of AI—a misunderstanding that appears to be more informed by popular fiction than by popular science. […]

The kind of artificial intelligence that is available today is not AGI. It may pass the Turing test — that is, it may be indistinguishable from a human as it answers questions posed by a user — but it is not capable of independent thought, and is certainly not self-aware.”

Essentially, NATO says that since AI is not capable of thinking for itself [AGI], there is nothing to worry about. However, even highly intelligent humans who are capable of thinking for themselves make errors, and a machine that depends on human input is all the more likely to make them.

The paper argues AI will inevitably improve the accuracy of nuclear targeting. Nowhere in the document are to be found the words “error” or “mistake” in singular or plural.

Grok combined two Laurias into one. There are 47 population centers in the United States with the name Moscow. Just sayin’. (I know, the coordinates are all different.)

But the NATO piece doesn’t want diplomatic discussion, complaining that, “The issue was even raised in discussions between the United States and China at the Asia-Pacific Economic Cooperation forum, which met in San Francisco in November (2023).”

The paper concluded, “With potential geopolitical benefits to be realised, banning AI from nuclear defences is a bad idea.”

In a sane world we would return to purely human interactions in our day-to-day lives and, if war can’t be prevented, at least return to more painstaking, human decisions about whom to target.

Since none of this is going to happen, we had better hope AI vastly improves so that comedy doesn’t turn into tragedy, or even worse, total catastrophe.

Joe Lauria is editor-in-chief of Consortium News and a former U.N. correspondent for The Wall Street Journal, Boston Globe, and other newspapers, including The Montreal Gazette, the London Daily Mail and The Star of Johannesburg. He was an investigative reporter for the Sunday Times of London, a financial reporter for Bloomberg News and began his professional work as a 19-year-old stringer for The New York Times. He is the author of two books, A Political Odyssey, with Sen. Mike Gravel, foreword by Daniel Ellsberg; and How I Lost By Hillary Clinton, foreword by Julian Assange.

More/Source: https://consortiumnews.com/2025/01/10/the-limits-of-ai-2/